What are Azure Container Apps?

Please note: at the time of writing, Azure Container Apps is a preview feature. It is not ready for production applications.

Do you love containers but don’t have the time to manage your own Kubernetes platform? Azure Container Apps is for you.

We all love containers: they’re small, portable, and run anywhere. Azure Container Apps is great if you just want to run some containers, but that’s just the beginning. With Container Apps, you enjoy the benefits of running containers while leaving behind the concerns of manual cloud infrastructure configuration and complex container orchestrators.

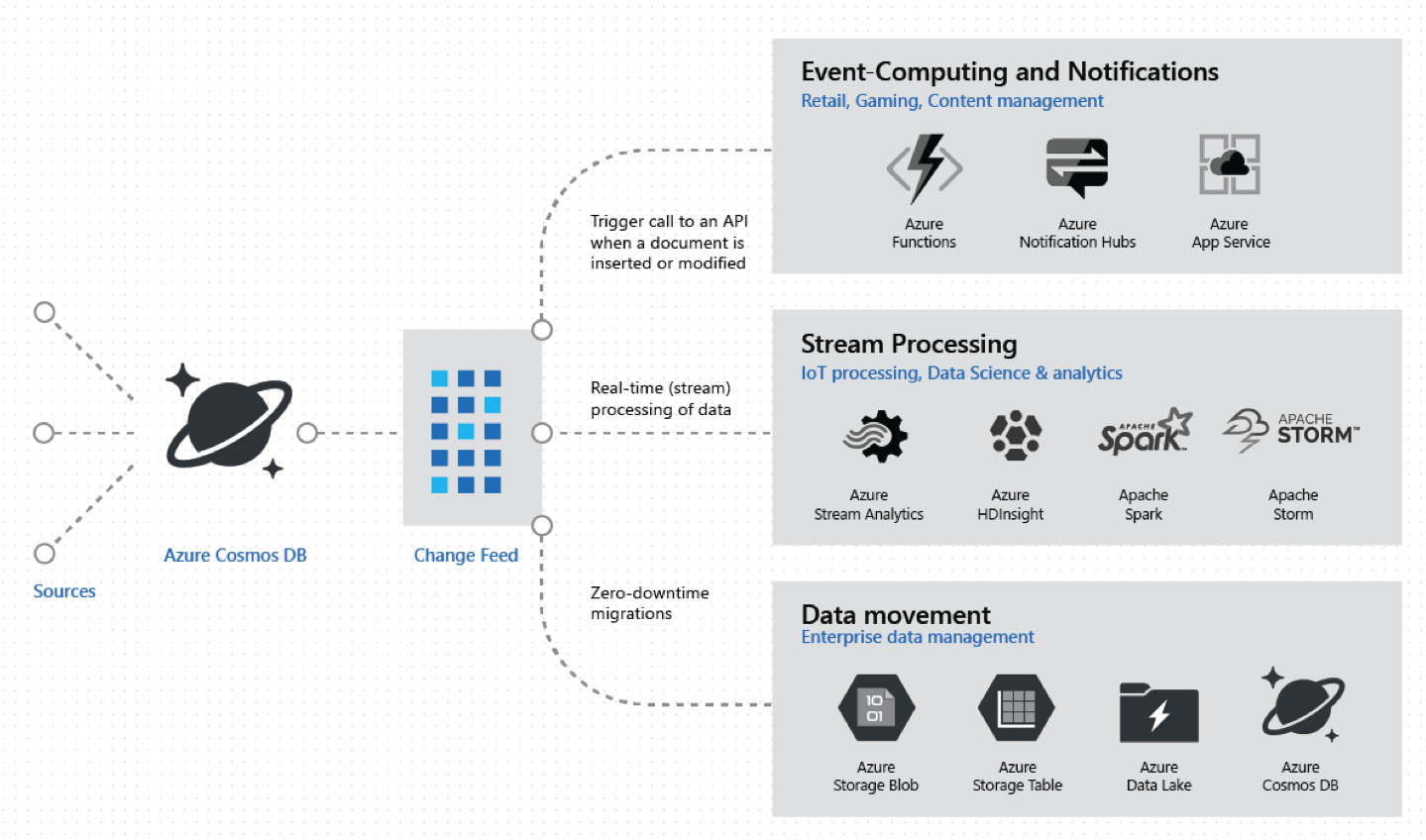

Azure Container Apps is serverless, so you just need to worry about your app code. You can use Azure Container Apps for public-facing endpoints, background processing apps, event-driven processing, and running microservices.

Azure Container Apps is built on top of Kubernetes, which means some of its open-source foundations, like Dapr and KEDA, are available to you. One thing to note is that you do not have access to K8s commands in Azure Container Apps. As we love to do in modern architectures, we can run different technologies, like Node.js and .NET, at the same time. We can support HTTP, microservices communication, event processing, and background tasks. With Container Apps, many of the requirements you have for modern apps are built in, like robust auto-scaling, ingress, versioning, configuration, and observability. With Azure Container Apps you can use all the good parts of K8s without the hard parts.

Where does this fit in Azure?

In Azure, we already have AKS, ACI, Web Apps for containers, and Service Fabric. Why do we need something new? In a very short summary:

- Azure Kubernetes Service (AKS) is a fully managed Kubernetes option in Azure. The full cluster is inside your subscription, which means you have power and control, but also responsibility. For example, you still need to maintain the cluster with upgrades and monitoring.

- Azure Container Instances (ACI) allows you to run containers without running servers. It’s a lower-level building block, and concepts like scale, load balancing, and certificates are not provided with ACI containers.

- Web Apps for containers (Azure App Service) is fully managed hosting for web applications run from code or containers. It is optimised for running web applications, not for the scenarios Container Apps targets, e.g. microservices.

So Azure Container Apps is designed for when you want to run multiple containers with K8s-like functionality, without the hassle of managing K8s.

You can find more details on the differences here.

As you can see, Azure Container Apps looks like a compelling offer for many Azure microservices scenarios. At XAM, in our Azure Consulting practice, we are extremely excited about the future of Azure Container Apps.

Getting Started

The great thing about Azure Container Apps is that it’s really easy to get started. In this post we are going to assume you have an Azure subscription.

If you haven’t already, you need to install the Azure CLI.

brew update && brew install azure-cli

Once you’ve completed the installation, you can log in to Azure.

az login

Then you can install the Azure Container Apps CLI extension.

az extension add \

--source https://workerappscliextension.blob.core.windows.net/azure-cli-extension/containerapp-0.2.2-py2.py3-none-any.whl
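Before moving on, it can help to confirm that the tools the rest of this post relies on are actually on your PATH. The following is a minimal sketch, not part of the Azure CLI; the helper name `require_cmd` is hypothetical.

```shell
# Hypothetical helper: succeed only if the named command can be found.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing required command: $1" >&2
    return 1
  fi
}

# Usage: stop early if the Azure CLI is not installed.
# require_cmd az || exit 1
```

Running the check at the top of a setup script gives a clear error message instead of a failure halfway through.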

To run a container app, we need both a Log Analytics workspace and a Container Apps environment. So first let’s set up the Log Analytics workspace.

az monitor log-analytics workspace create \

--resource-group InternalProjects \

--workspace-name demoproject-logs

Then we can get the client ID and secret of the workspace.

LOG_ANALYTICS_WORKSPACE_CLIENT_ID=$(az monitor log-analytics workspace show --query customerId -g InternalProjects -n demoproject-logs -o tsv | tr -d '[:space:]')

LOG_ANALYTICS_WORKSPACE_CLIENT_SECRET=$(az monitor log-analytics workspace get-shared-keys --query primarySharedKey -g InternalProjects -n demoproject-logs -o tsv | tr -d '[:space:]')

Now that we have a Log Analytics workspace, we can create our Azure Container Apps environment. An environment is a secure boundary around groups of container apps.
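Before creating the environment, it may be worth checking that both values were actually captured, since an empty variable would only show up later as a confusing error. This is a small sketch under that assumption; `require_value` is a hypothetical helper, not an Azure CLI command.

```shell
# Hypothetical helper: fail if a captured value is empty.
# $1 = label used in the error message, $2 = the value to check
require_value() {
  if [ -z "${2:-}" ]; then
    echo "$1 is empty; re-run the command that sets it" >&2
    return 1
  fi
}

# Usage:
# require_value LOG_ANALYTICS_WORKSPACE_CLIENT_ID "$LOG_ANALYTICS_WORKSPACE_CLIENT_ID" || exit 1
# require_value LOG_ANALYTICS_WORKSPACE_CLIENT_SECRET "$LOG_ANALYTICS_WORKSPACE_CLIENT_SECRET" || exit 1
```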

az containerapp env create \

--name containerappsdemo \

--resource-group InternalProjects\

--logs-workspace-id $LOG_ANALYTICS_WORKSPACE_CLIENT_ID \

--logs-workspace-key $LOG_ANALYTICS_WORKSPACE_CLIENT_SECRET \

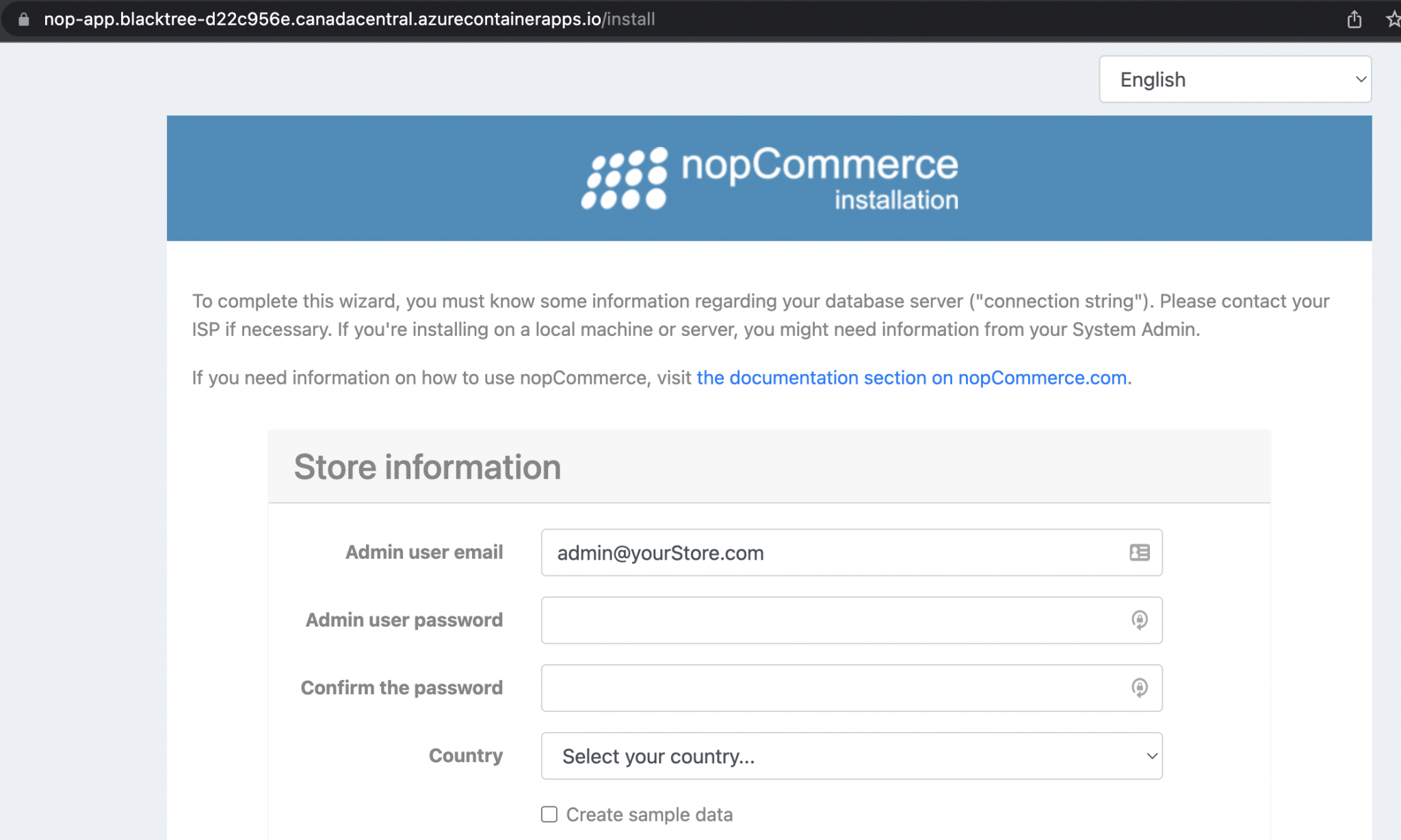

--location canadacentralNow that we have our Container Apps Environment we can easily run a container. Rather than make our own image we can use something already built, in this case I’m going to use the docker images of nopcommerce (which is a .net eCommerce platform). You can see the image here: https://hub.docker.com/r/nopcommerceteam/nopcommerce:latest

az containerapp create \

--name nop-app \

--resource-group InternalProjects \

--environment containerappsdemo \

--image docker.io/nopcommerceteam/nopcommerce \

--target-port 80 \

--ingress 'external' \

--query configuration.ingress.fqdn

After we run the command, we get the public address of the running container app and can browse to it to see it running.
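If you need the address again later, one option is to look it up rather than re-reading the output of the create command. The sketch below assumes that `az containerapp show` is available in your installed extension version and that the FQDN sits at the same `configuration.ingress.fqdn` query path used above; the path may differ in other CLI versions. The function name `app_url` is hypothetical.

```shell
# Hypothetical helper: print the HTTPS URL of a container app.
# $1 = app name, $2 = resource group
app_url() {
  # Assumption: the query path matches the one used with `az containerapp create` above.
  fqdn=$(az containerapp show \
    --name "$1" \
    --resource-group "$2" \
    --query configuration.ingress.fqdn \
    -o tsv | tr -d '[:space:]')
  # An empty result means the lookup failed or ingress is not enabled.
  [ -n "$fqdn" ] || return 1
  printf 'https://%s\n' "$fqdn"
}

# Usage:
# app_url nop-app InternalProjects
```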

Running multiple containers and multiple technologies

Now that we have our environment, we can run more containers in it. Containers already let us mix technologies for free, but for fun let’s prove it by running a Node.js application next to our .NET app. In this case I’ve used another pre-built container, a hello world Node.js app: https://mcr.microsoft.com/azuredocs/aci-helloworld

az containerapp create \

--name nodeapp \

--resource-group InternalProjects \

--environment containerappsdemo \

--image mcr.microsoft.com/azuredocs/aci-helloworld \

--target-port 80 \

--ingress 'external' \

--query configuration.ingress.fqdn

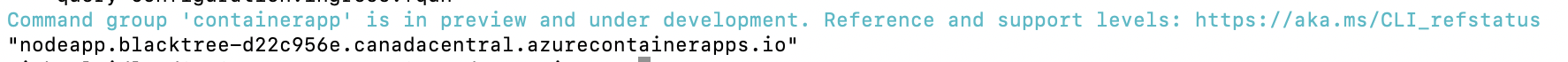

After we run our command, we are returned the public URL of the Container App. We can see below the URL of

If we then open that URL in our browser, we can see our running Node.js app, served from a secure endpoint.
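The two create commands in this post differ only in the app name and the image, so they can be wrapped in a small helper if you plan to add more apps. This is a sketch only; `create_app` is a hypothetical name, and the resource group and environment are the example values used earlier.

```shell
# Hypothetical wrapper around the create command used in this post.
# $1 = app name, $2 = container image
create_app() {
  az containerapp create \
    --name "$1" \
    --resource-group InternalProjects \
    --environment containerappsdemo \
    --image "$2" \
    --target-port 80 \
    --ingress external \
    --query configuration.ingress.fqdn
}

# Usage:
# create_app nodeapp mcr.microsoft.com/azuredocs/aci-helloworld
```

Both images used here listen on port 80; an image that listens elsewhere would need a different `--target-port`.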

Summary

Azure Container Apps allows you to build K8s-style applications without the hassle of managing K8s. This technology has a huge amount of potential to help companies of all sizes deliver more value to their customers faster and more efficiently, by cutting down on the management and configuration required for these types of applications.

I’m really excited to see Azure Container Apps evolve and I would love to one day use this in a production scenario.